“Echo, are you spying on me?”

“No, I’m not spying on you. I value your privacy.”

-Me, to my Amazon Echo Dot

So, may I be the first to say: holy shitake mushrooms, my fellow colleagues. There is an unbelievable amount to address in this week’s readings, so I’ll weave it into a cohesive narrative to the best of my ability. I was adamant about facilitating the discussion on data and surveillance because I began researching this field last semester. I took a course within the Urban Education program titled Immigration and the Intersections of Education, Law, and Psychology, and for my term paper I drafted an article of my own, currently titled “Digital Challenges of Immigration: How Technology is Working Against Diverse Bodies.” My article is about the rapid technological and database advancements made within the United States after September 11, 2001, and how these technologies are working against different body types and fostering potentially dangerous digital environments for populations such as immigrant college students. When Jones, Thomson, and Arnold (briefly) mentioned the term “biomarkers” in their piece “Questions of Data Ownership on Campus,” I made the connection to Ruha Benjamin’s explanation of biomarkers in Race After Technology.

According to Benjamin (2019), names within these large collections of data are encoded with racial markers: they signal not only cultural background but also a plethora of historical context. What makes this concept stick out to me is the readings’ discussion of universities’ misuse and storage of #BigData, and the startling ideas about how these collections can be linked and used. Reading the following quotes, one can’t help but get a severely sinister vibe:

“Rarely are such systems outright coercive, but one could imagine developing such systems by, for instance, linking student activity data from a learning management system to financial aid awards. Rather than relying on end-of-semester grades, an institution might condition aid on keeping up on work performed throughout the semester: reading materials accessed, assignments completed, and so forth.”

“From application to admission through to graduation, students are increasingly losing the ability to find relief from data and information collection. Students are required to reveal sensitive details about their past life and future ambitions, in addition to a host of biographic information, simply to be considered for admission—they are never guaranteed anything in return for these information disclosures.”

“’College students are perhaps the most desirable category of consumers,’ says Emerson’s Newman. ‘They are the trickiest to reach and the most likely to set trends.’ As a result, he says, their data is some of the most valuable and the most likely to be mined or sold.”

“But the company also claims to see much more than just attendance. By logging the time a student spends in different parts of the campus, Benz said, his team has found a way to identify signs of personal anguish: A student avoiding the cafeteria might suffer from food insecurity or an eating disorder; a student skipping class might be grievously depressed. The data isn’t conclusive, Benz said, but it can ‘shine a light on where people can investigate, so students don’t slip through the cracks.’”

For starters, what the hell? Perhaps we should slowly walk through the problematic features of these quotes because, at first glance, the everyday reader (the non-edtech enthusiast) may not pick up on the subtle (or for some, not so subtle) red flags in each one.

The first red flag I’m referring to is the blatant mention of coercion. This quote was pulled from Jones et al.’s “Student Perspectives on Privacy and Library Participation in Learning Analytics Initiatives,” which focused on a WiFi-based tracking system that required all students to download an app so the university could keep attendance records. I teach my Communications students that what makes “coercion” different from “convincing” is that coercion persuades the target through threats and fear tactics. Suggesting that the future of such technology could involve linking these apps to learning management systems (LMS) in order to turn financial aid into a conditional reward is horrific, ableist, racist, and classist, to say the least.

Dangling financial aid like a carrot in front of students to get them to perform to a university’s liking is extremely dystopian. This veers heavily into racism and classism because such a reward system may not apply to privileged students who do not need financial aid. Students from lower-income communities rely on that aid not only to pay tuition but also to have food to eat and a roof over their heads. When the rug is pulled out from under them because of, let’s say, a medical condition that occasionally keeps them from attending class, who does that benefit? And as we know, low-income communities are more often than not predominantly made up of minority groups. This is very reminiscent of the introduction to Virginia Eubanks’s book, Automating Inequality: How High-Tech Tools Profile, Police, and Punish the Poor, in which she tells the story of how her partner required a life-saving $62,000 surgery. Eubanks’s (2018) insurance company denied coverage for the surgery, even though her domestic partner was covered through her insurance at her new place of employment. Upon further investigation, Eubanks (2018) realized that her family had been red-flagged and placed under investigation for fraud. Who is to say that decisions like these aren’t influenced by racial markers within these big data clouds?

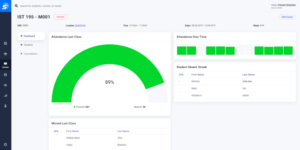

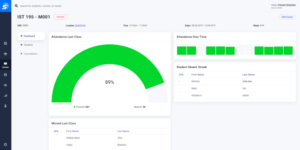

On top of these questionable backend practices, the actual user interface that displays student attendance and progress, as described in the same article, looks similar to applications like Credit Karma (I’d share a screenshot to show the comparison, but I don’t need you guys seeing my credit score #DataPrivacy). What struck me was how it resonated with the same piece’s discussion of “cradle-to-grave profiles”: a student is tracked throughout their entire academic career, and these services then trail them into their professional careers in order to evaluate outcomes.

I apologize, but I am fixated on this piece because it addressed so many violations of college students’ independence in a way that left me truly disturbed. The piece also describes how these tracking methods can pinpoint a student’s exact location, supposedly to help keep tabs on their health throughout the semester. As the last quote shows, by tracking where students spend their time on campus, the company claims it can guess what they are going through emotionally. Huh?! Making the bold assumption that a student has an eating disorder because they do not spend time in the dining hall crosses so many social boundaries. If we’re being honest, a lot of universities have crap dining options; I know mine did when I was an undergrad. So if a student stays in their dorm building to cook and study, they may get flagged in the database as a hazard or concern of some sort to the university. The more I pry open this article, the more dystopian it feels.

“Because if [my institution] had the intention of using my data to create better programs or better educational tools, then I’m all for it, you know…. But I could also see certain things that are tracked, maybe being a little embarrassing. I initially didn’t go [to the counseling center] for a long time because I was embarrassed, because I knew that the university was going to be able to track that and look at my record and say, “Oh yeah, she’s been going to counseling.” And maybe if they wanted to, they could somehow find out what exactly it was that I was talking to the therapist about.”

We want to assume that these practices aren’t super present in the magical land that is CUNY, but students have experienced similar practices within our very own Graduate Center. I was talking with a peer of mine about the Wellness Center and the counseling services covered by our tuition and fees; this person had decided to use the counseling services to talk through some stuff. First of all, we only receive SIX (6) sessions a semester as graduate students, like, okay. Second, during the session, my peer was told that the session was being recorded on camera. However, they were assured that there was no audio. Wait, what? Yeah, cameras in the counseling center, apparently. I want to investigate this for myself but unfortunately have not had the time. We tried to justify it with the idea that doctoral students in specific psychology disciplines needed to meet an hours requirement, but that just wasn’t enough to make us feel comfortable using a space where we are expected to be vulnerable.

I’ve been ranting quite a bit at this point, so I’ll start to wrap it up and leave the remainder for our class discussion. Through the references in this post alone, I want to convey my extreme discomfort that these unethical educational technologies are invading university campuses in ways that threaten the growth and development of every student’s individuality. Higher education is about wellness and the personal experiences that influence our decisions just as much as (or at least nearly as much as) it is about the ways classrooms shape our futures. I have so much that I want to talk about, so I am looking forward to probing these horrors even more deeply with you all tomorrow.